Selected Publications

This page gives details on a selection of my articles. For a complete list of my articles, please see my Google Scholar profile. The abstracts are taken from the articles themselves and are therefore available only in English for now.

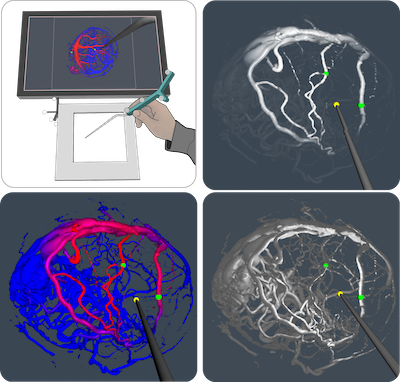

Interaction driven enhancement of depth perception in angiographic volumes

Simon Drouin, Daniel A. Di Giovanni, Marta Kersten-Oertel, D. Louis Collins, IEEE Transactions on Visualization and Computer Graphics, (2018)

User interaction has the potential to greatly facilitate the exploration and understanding of 3D medical images for diagnosis and treatment. However, in certain specialized environments such as in an operating room (OR), technical and physical constraints, such as the need to enforce strict sterility rules, make interaction challenging. In this paper, we propose to facilitate the intraoperative exploration of angiographic volumes by leveraging the motion of a tracked surgical pointer, a tool that is already manipulated by the surgeon when using a navigation system in the OR. We designed and implemented three interactive rendering techniques based on this principle. The benefit of each of these techniques is compared to its non-interactive counterpart in a psychophysics experiment where 20 medical imaging experts were asked to perform a reaching/targeting task while visualizing a 3D volume of angiographic data. The study showed a significant improvement of the appreciation of local vascular structure when using dynamic techniques, while not having a negative impact on the appreciation of the global structure and only a marginal impact on the execution speed. A qualitative evaluation of the different techniques showed a preference for dynamic chroma-depth in accordance with the objective metrics but a discrepancy between objective and subjective measures for dynamic aerial perspective and shading.

Interaction in Augmented Reality Image-Guided Surgery

Simon Drouin, D. Louis Collins, Marta Kersten-Oertel, in Book: Mixed and Augmented Reality in Medicine, (2018)

The effectiveness of augmented reality in image-guided surgery visualization depends on its ability to provide the right information at the right time, provide an unambiguous and perceptually sound representation of the preoperative plans, guidance information, and anatomy of the patient, and to ensure the surgeon is not distracted by the visualization. In this chapter, we argue that to reach these goals, it is necessary to systematically consider user interaction in the design of new surgical AR methods. We separate interaction methods for medical AR into three categories, according to the goal of the interaction: control of the navigation system, simplification of a surgical task, and enhanced perception of navigation information. For each of the categories, we analyze the interrelationship between interaction and visualization in the light of human perception. We review the literature of interactive AR techniques applied to image-guided surgery before proposing avenues for future research based on perception issues that have not been addressed in the surgical AR literature.

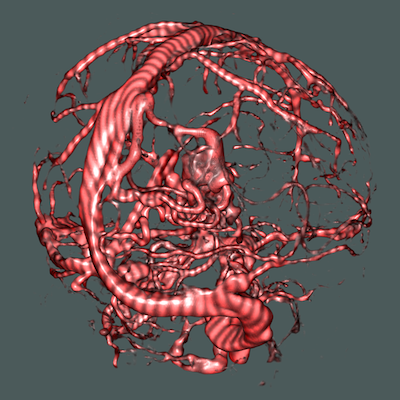

PRISM: An open source framework for the interactive design of GPU volume rendering shaders

Simon Drouin, D. Louis Collins, PLOS ONE, volume 13 (3) (2018)

Direct volume rendering has become an essential tool to explore and analyse 3D medical images. Despite several advances in the field, it remains a challenge to produce an image that highlights the anatomy of interest, avoids occlusion of important structures, provides an intuitive perception of shape and depth while retaining sufficient contextual information. Although the computer graphics community has proposed several solutions to address specific visualization problems, the medical imaging community still lacks a general volume rendering implementation that can address a wide variety of visualization use cases while avoiding complexity. In this paper, we propose a new open source framework called the Programmable Ray Integration Shading Model, or PRISM, that implements a complete GPU ray-casting solution where critical parts of the ray integration algorithm can be replaced to produce new volume rendering effects. A graphical user interface allows clinical users to easily experiment with pre-existing rendering effect building blocks drawn from an open database. For programmers, the interface enables real-time editing of the code inside the blocks. We show that in its default mode, the PRISM framework produces images very similar to those produced by a widely-adopted direct volume rendering implementation in VTK at comparable frame rates. More importantly, we demonstrate the flexibility of the framework by showing how several volume rendering techniques can be implemented in PRISM with no more than a few lines of code. Finally, we demonstrate the simplicity of our system in a usability study with 5 medical imaging expert subjects who have none or little experience with volume rendering. The PRISM framework has the potential to greatly accelerate development of volume rendering for medical applications by promoting sharing and enabling faster development iterations and easier collaboration between engineers and clinical personnel.

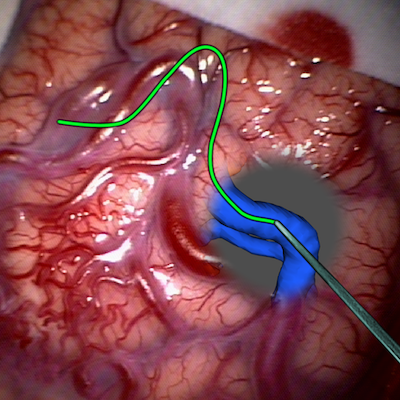

IBIS: an OR ready open-source platform for image-guided neurosurgery

Simon Drouin, Anna Kochanowska, Marta Kersten-Oertel, Ian J. Gerard, Rina Zelmann, Dante De Nigris, Silvain Bériault, Tal Arbel, Denis Sirhan, Abbas F. Sadikot, Jeffery A. Hall, David S. Sinclair, Kevin Petrecca, Rolando F. DelMaestro, D. Louis Collins, International Journal of Computer Assisted Radiology and Surgery, volume 12 (3) (2015)

Purpose: Navigation systems commonly used in neurosurgery suffer from two main drawbacks: 1) their accuracy degrades over the course of the operation and 2) they require the surgeon to mentally map images from the monitor to the patient. In this paper, we introduce the Intraoperative Brain Imaging System (IBIS), an open source image-guided neurosurgery (IGNS) research platform that implements a novel workflow where navigation accuracy is improved using tracked intraoperative ultrasound (iUS) and the visualization of navigation information is facilitated through the use of augmented reality (AR).

Methods: The IBIS platform allows a surgeon to capture tracked iUS images and use them to automatically update preoperative patient models and plans through fast GPU-based reconstruction and registration methods. Navigation, resection and iUS-based brain shift correction can all be performed using an AR view. IBIS has an intuitive graphical user interface (GUI) for the calibration of a US probe, a surgical pointer, and the video devices used for AR (e.g., a surgical microscope).

Results: The components of IBIS have been validated in the lab and evaluated in the operating room. Image-to-patient registration accuracy is on the order of 3.72 ± 1.27 mm and can be improved with iUS to a median target registration error of 2.54 mm. The accuracy of the US probe calibration is between 0.49 mm and 0.82 mm. The average reprojection error of the AR system is 0.37 ± 0.19 mm. The system has been used in the operating room for various types of surgery, including brain tumor resection, vascular neurosurgery, spine surgery and DBS electrode implantation.

Conclusions: The IBIS platform is a validated system that allows researchers to quickly bring the results of their work into the operating room for evaluation. It is the first open source navigation system to provide a complete solution for AR visualization.

Interaction-based registration correction for improved augmented reality overlay in neurosurgery

Simon Drouin, Marta Kersten-Oertel, D. Louis Collins, Workshop on Augmented Environments for Computer-Assisted Interventions, volume 9365 (2015)

In image-guided neurosurgery the patient is registered with the reference of a tracking system and preoperative data before sterile draping. Due to several factors extensively reported in the literature, the accuracy of this registration can be much deteriorated after the initial phases of the surgery. In this paper, we present a simple method that allows the surgeon to correct the initial registration by tracing corresponding features in the real and virtual parts of an augmented reality view of the surgical field using a tracked pointer. Results of a preliminary study on a phantom yielded a target registration error of 4.06 ± 0.91 mm, which is comparable to results for initial landmark registration reported in the literature.